Anonymizing machine learning data has become a foundational requirement for modern artificial intelligence. As organizations collect richer datasets, privacy risks increase at the same pace. Customer behavior logs, health records, transaction histories, and location signals all contain traces of real people.

That reality creates pressure.

Teams want accurate models. Regulators demand privacy protection. Users expect their information to stay safe. Fortunately, these goals can coexist when data anonymization is designed correctly.

This article explains how privacy-preserving data practices fit into machine learning pipelines, how anonymization techniques work, and how teams can reduce risk without sacrificing insight.

Why Privacy Protection Matters in AI Pipelines

Machine learning thrives on detailed data. However, detail increases exposure.

Even when obvious identifiers are removed, individuals can still be recognized through combinations of attributes. This risk grows as datasets expand and external data sources multiply.

As a result, privacy breaches often occur unintentionally.

Anonymizing machine learning data reduces that exposure. It protects individuals while also protecting organizations from legal, financial, and reputational harm.

Privacy is not just compliance. It is credibility.

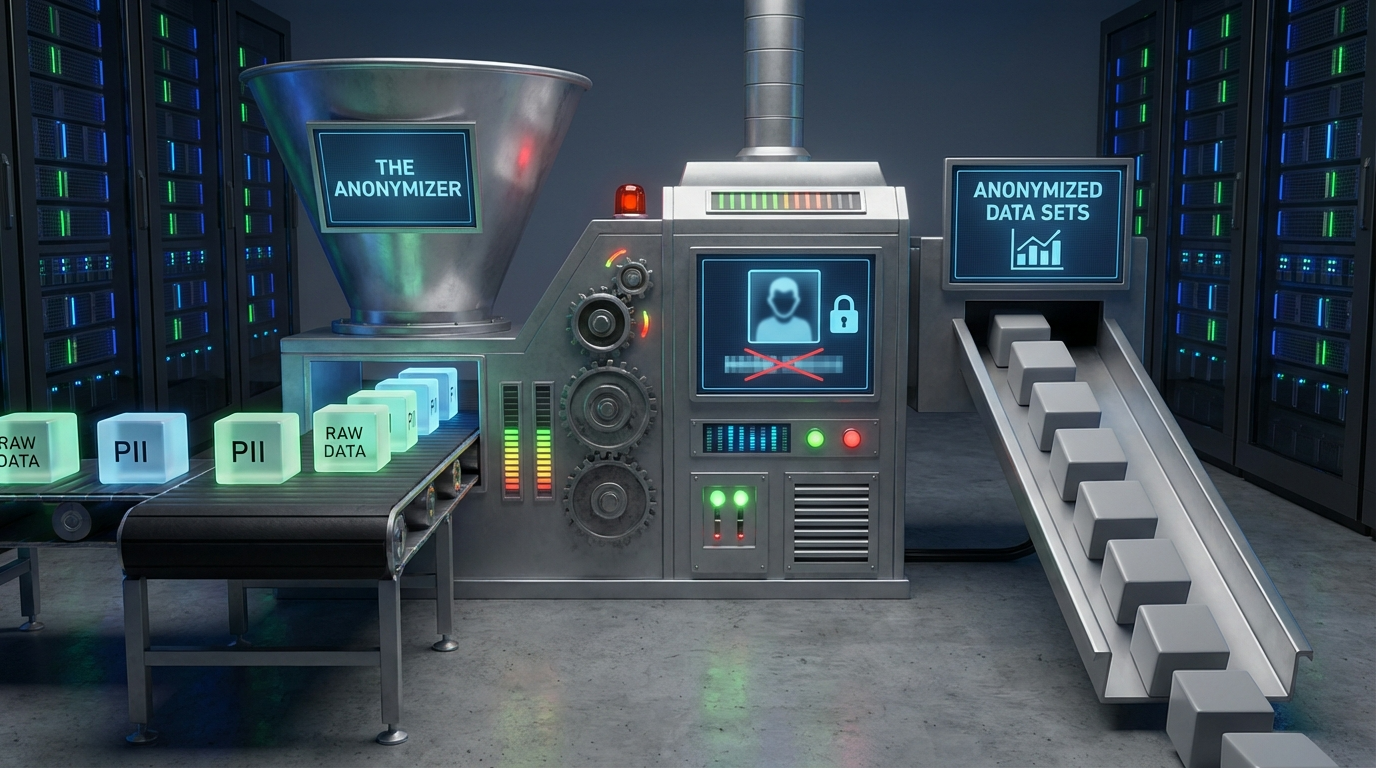

What Data Anonymization Actually Involves

Anonymization goes beyond removing names or IDs. Stripping or replacing direct identifiers, often called pseudonymization when the identifiers are swapped for tokens, rarely provides sufficient protection on its own.

True anonymization means individuals cannot be re-identified by any means reasonably likely to be used, even when external data is available.
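A minimal Python sketch of pseudonymization makes the gap visible. It replaces direct identifiers with a keyed hash (HMAC-SHA256). The function name `pseudonymize`, the key, and the sample record are hypothetical; every other field passes through unchanged.

```python
import hashlib
import hmac


def pseudonymize(record: dict, id_fields: list, key: bytes) -> dict:
    """Replace direct identifiers with a keyed hash; all other fields pass through unchanged."""
    out = dict(record)
    for field in id_fields:
        digest = hmac.new(key, str(record[field]).encode("utf-8"), hashlib.sha256)
        out[field] = digest.hexdigest()[:16]  # same input + same key -> same token
    return out


# Hypothetical key; in practice it would live in a secrets manager, apart from the data.
secret_key = b"example-key-kept-separately"
record = {"name": "Alice Smith", "email": "alice@example.com", "age": 34, "zip": "94107"}
print(pseudonymize(record, ["name", "email"], secret_key))
```

Whoever holds the key can re-link tokens to people, and age plus ZIP code still travel with every record. That is why GDPR treats pseudonymized data as personal data.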

This distinction matters deeply in AI.

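Seemingly harmless attributes like age, location, or job title can combine to reveal identity. Latanya Sweeney, for example, estimated that ZIP code, birth date, and sex alone uniquely identify about 87% of the U.S. population. Therefore, anonymization must consider the full data landscape.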
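A quick way to see this effect is to count how many records are unique on a combination of attributes. The sketch below uses plain Python; the function name, field names, and sample rows are illustrative only.

```python
from collections import Counter


def unique_fraction(rows: list, quasi_ids: list) -> float:
    """Share of rows whose combination of quasi-identifier values appears exactly once."""
    keys = [tuple(row[q] for q in quasi_ids) for row in rows]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(rows)


rows = [
    {"age": 34, "zip": "94107", "job": "nurse"},
    {"age": 34, "zip": "94107", "job": "teacher"},
    {"age": 51, "zip": "10001", "job": "nurse"},
    {"age": 51, "zip": "10001", "job": "nurse"},
]
print(unique_fraction(rows, ["age"]))                # 0.0: nobody stands out by age alone
print(unique_fraction(rows, ["age", "zip", "job"]))  # 0.5: half the rows become unique
```

Each attribute on its own looks harmless; it is the combination that singles people out.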

Effective anonymization is systematic, not cosmetic.

Where Privacy Risks Enter Machine Learning Workflows

Privacy risks appear at multiple stages.

Data collection introduces raw exposure. Data aggregation strengthens identifying patterns. Feature engineering may encode sensitive traits indirectly.

Even trained models can leak information. Membership inference attacks, for example, can reveal whether a specific person’s data was included in the training set.

Anonymizing machine learning data early reduces downstream risk. Prevention is far less costly than remediation.

Ignoring early risks compounds problems later.

Regulations Driving Privacy-Safe Data Practices

Global privacy laws have reshaped AI development.

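Regulations such as GDPR, CCPA, and HIPAA impose strict standards. Penalties for violations can be severe: GDPR fines, for instance, can reach €20 million or 4% of global annual turnover, whichever is higher.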

Properly anonymized datasets often fall outside regulatory scope; GDPR, for example, does not apply to data that has been rendered truly anonymous. That advantage simplifies compliance and data sharing.

However, regulators evaluate anonymization claims carefully. Weak techniques do not qualify.

Anonymizing machine learning data correctly supports lawful innovation.

Balancing Privacy and Model Utility

Anonymization introduces trade-offs.

As privacy protections increase, data precision may decrease. Over-anonymization can weaken model performance.

The goal is balance.

Think of anonymization like frosted glass. Shapes remain visible, but individual details disappear.

Successful strategies for anonymizing machine learning data preserve patterns while removing identity.

Achieving this balance requires thoughtful design and testing.

Core Techniques for Anonymizing Machine Learning Data

Several techniques support privacy protection.

Masking replaces sensitive values. Generalization reduces precision. Noise injection adds randomness. Aggregation summarizes groups.
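Each technique fits in a few lines. The Python sketch below shows one hypothetical helper per technique; the function names, band width, noise scale, and sample values are assumptions chosen for illustration.

```python
import random


def mask_email(email: str) -> str:
    """Masking: hide most of the local part, keep the domain."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


def generalize_age(age: int, width: int = 10) -> str:
    """Generalization: replace an exact age with a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def add_noise(value: float, scale: float, rng: random.Random) -> float:
    """Noise injection: perturb a numeric value with zero-mean Gaussian noise."""
    return value + rng.gauss(0.0, scale)


def aggregate_mean(rows: list, group_key: str, value_key: str) -> dict:
    """Aggregation: release one mean per group instead of individual rows."""
    sums, counts = {}, {}
    for row in rows:
        group = row[group_key]
        sums[group] = sums.get(group, 0.0) + row[value_key]
        counts[group] = counts.get(group, 0) + 1
    return {group: sums[group] / counts[group] for group in sums}


rng = random.Random(7)
print(mask_email("alice@example.com"))  # a***@example.com
print(generalize_age(34))               # 30-39
print(add_noise(52_000.0, 1_000.0, rng))
print(aggregate_mean([{"region": "north", "spend": 120.0},
                      {"region": "north", "spend": 80.0},
                      {"region": "south", "spend": 50.0}], "region", "spend"))
# {'north': 100.0, 'south': 50.0}
```

Ad hoc noise like this hides individual values but carries no formal guarantee; differential privacy is the technique that calibrates noise precisely.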

Each method serves a different purpose.

In practice, anonymizing machine learning data often combines multiple techniques.

The right mix depends on data type, risk tolerance, and use case.

Group-Based Privacy With k-Anonymity

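k-anonymity ensures each record is indistinguishable from at least k-1 others on its quasi-identifiers, the attributes such as age, ZIP code, or gender that could be linked to outside data. Individuals blend into groups rather than standing alone.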

This approach reduces re-identification risk effectively in many cases.
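The sketch below measures k as the size of the smallest group of records sharing the same quasi-identifier values (age and ZIP code here), then shows how a hypothetical generalization step raises it. The helper names, band widths, and records are illustrative assumptions.

```python
from collections import Counter


def k_of(rows: list, quasi_ids: list) -> int:
    """Return k: the size of the smallest equivalence class over the quasi-identifiers."""
    counts = Counter(tuple(row[q] for q in quasi_ids) for row in rows)
    return min(counts.values())


def generalize(row: dict) -> dict:
    """Hypothetical generalization: 10-year age bands and 3-digit ZIP prefixes."""
    out = dict(row)
    low = (row["age"] // 10) * 10
    out["age"] = f"{low}-{low + 9}"
    out["zip"] = row["zip"][:3] + "**"
    return out


rows = [
    {"age": 31, "zip": "94107", "diagnosis": "flu"},
    {"age": 36, "zip": "94110", "diagnosis": "asthma"},
    {"age": 52, "zip": "10001", "diagnosis": "flu"},
    {"age": 57, "zip": "10003", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(k_of(rows, qi))                           # 1: every record is unique
print(k_of([generalize(r) for r in rows], qi))  # 2: each record now hides among two
```

Real tools search for the least generalization that reaches a target k, since every coarsening step also costs precision.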

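However, k-anonymity alone may fail when attackers have background knowledge, or when every record in a group shares the same sensitive value, since membership in the group then reveals that value.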

Despite limitations, it remains a useful building block.

Many anonymizing machine learning data workflows begin here.

Enhancing Protection With l-Diversity and t-Closeness

l-diversity strengthens group privacy by requiring each group to contain at least l well-represented values of the sensitive attribute; in its simplest form, at least l distinct values.

t-closeness goes further by requiring the distribution of the sensitive attribute within each group to stay within a distance t of its distribution across the whole dataset.
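Both checks can be computed directly from grouped records. The sketch below assumes already-generalized data, uses the simplest (distinct-values) form of l-diversity, and measures t with total variation distance, which equals Earth Mover's Distance for categorical values when all distinct categories are treated as equally far apart. Function names and rows are hypothetical.

```python
from collections import Counter, defaultdict


def group_sensitive(rows: list, quasi_ids: list, sensitive: str) -> dict:
    """Collect the sensitive values of each equivalence class."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[q] for q in quasi_ids)].append(row[sensitive])
    return groups


def distinct_l(rows: list, quasi_ids: list, sensitive: str) -> int:
    """Distinct l-diversity: the fewest distinct sensitive values found in any group."""
    return min(len(set(vals)) for vals in group_sensitive(rows, quasi_ids, sensitive).values())


def max_t(rows: list, quasi_ids: list, sensitive: str) -> float:
    """Largest distance between any group's sensitive distribution and the overall one."""
    overall = Counter(row[sensitive] for row in rows)
    n = len(rows)
    worst = 0.0
    for vals in group_sensitive(rows, quasi_ids, sensitive).values():
        local, m = Counter(vals), len(vals)
        tvd = 0.5 * sum(abs(local[v] / m - overall[v] / n) for v in overall)
        worst = max(worst, tvd)
    return worst


rows = [
    {"age": "30-39", "zip": "941**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "941**", "diagnosis": "asthma"},
    {"age": "50-59", "zip": "100**", "diagnosis": "flu"},
    {"age": "50-59", "zip": "100**", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(distinct_l(rows, qi, "diagnosis"))  # 2
print(max_t(rows, qi, "diagnosis"))       # 0.25

# Homogeneity: if both 50-59 records were "flu", the group would reveal the diagnosis.
skewed = rows[:3] + [{"age": "50-59", "zip": "100**", "diagnosis": "flu"}]
print(distinct_l(skewed, qi, "diagnosis"))  # 1
```

A release policy would then require, say, l of at least 2 and t below a chosen threshold; which thresholds are acceptable is a project decision.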

These methods reduce inference risk but increase complexity.

Data utility may decline if applied aggressively.

Anonymizing machine learning data requires evaluating whether these enhancements align with project goals.

Differential Privacy in Modern ML Systems

Differential privacy offers mathematically grounded protection.

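It adds noise calibrated to how much any single person can change a result, so outputs look nearly the same whether or not a given individual’s data is included. A privacy parameter, epsilon (ε), bounds that difference: smaller values mean stronger privacy and more noise.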
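The classic building block is the Laplace mechanism. The Python sketch below applies it to a counting query using only the standard library; the function names and sample ages are hypothetical, and epsilon plays the role described above.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values: list, predicate, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon satisfies epsilon-differential privacy.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 58, 62, 29, 47, 71]
print(private_count(ages, lambda a: a > 40, epsilon=1.0, rng=rng))  # near 5, but noisy
print(private_count(ages, lambda a: a > 40, epsilon=0.1, rng=rng))  # much noisier
```

Every additional query spends more of the privacy budget, and naive floating-point sampling like this has known weaknesses, so production systems typically rely on vetted differential privacy libraries.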

This approach scales well for analytics and learning tasks.

Large technology companies and public agencies rely on it; Apple, Google, and the U.S. Census Bureau (for the 2020 Census) have all deployed it.

Anonymizing machine learning data with differential privacy provides strong guarantees, though tuning requires expertise.

Synthetic Data as a Privacy-Preserving Alternative

Synthetic data generates artificial records that reflect real statistical properties.

Because no real individual is referenced directly, privacy risk drops significantly.

However, quality varies.

Poorly generated data may memorize and reproduce real records, or distort the distributions a model needs to learn.

Anonymizing machine learning data through synthetic generation requires rigorous validation to ensure realism and safety.
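As a deliberately naive illustration, the sketch below generates synthetic rows by sampling each column independently, then runs one basic validation check: how many synthetic rows exactly copy a real row. The generator and check are hypothetical stand-ins, not a recommended synthesizer.

```python
import random


def sample_marginals(rows: list, n: int, rng: random.Random) -> list:
    """Naive generator: sample each column independently from its observed values.

    This keeps per-column distributions but discards correlations between columns,
    one way synthetic data can distort what a model learns.
    """
    columns = list(rows[0].keys())
    return [{c: rng.choice(rows)[c] for c in columns} for _ in range(n)]


def exact_copy_rate(real: list, synthetic: list) -> float:
    """Validation check: share of synthetic rows that reproduce a real row exactly."""
    real_keys = {tuple(sorted(r.items())) for r in real}
    return sum(tuple(sorted(s.items())) in real_keys for s in synthetic) / len(synthetic)


rng = random.Random(3)
real = [
    {"age_band": "30-39", "plan": "basic"},
    {"age_band": "50-59", "plan": "premium"},
    {"age_band": "30-39", "plan": "premium"},
]
synthetic = sample_marginals(real, 1000, rng)
print(exact_copy_rate(real, synthetic))
```

With so few categories, many synthetic rows coincide with real ones by chance, so an exact-copy rate has to be read alongside nearest-neighbour distance checks and utility comparisons.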

Embedding Anonymization Into the Data Pipeline

Privacy protection should not be an afterthought.

Ideally, anonymization occurs early in the pipeline. Raw data enters restricted zones. Privacy transformations happen before wide access.

Feature engineering must respect privacy constraints. Logs and backups require equal care.
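One way to make "anonymize before wide access" enforceable is a release step that applies transformations inside the restricted zone and refuses to publish output that fails a privacy check. The sketch below uses a k-anonymity gate; the function names, transforms, and threshold are hypothetical.

```python
from collections import Counter
from typing import Callable

Transform = Callable[[dict], dict]


def release_to_shared_zone(raw_rows: list, transforms: list, quasi_ids: list, min_k: int) -> list:
    """Apply privacy transformations to raw rows, then gate the release on k-anonymity.

    Raises ValueError instead of publishing if the output fails the check.
    """
    out = []
    for row in raw_rows:
        for transform in transforms:
            row = transform(row)
        out.append(row)
    counts = Counter(tuple(r[q] for q in quasi_ids) for r in out)
    k = min(counts.values())
    if k < min_k:
        raise ValueError(f"release blocked: k={k} is below the required {min_k}")
    return out


def drop_name(row: dict) -> dict:
    """Hypothetical transform: remove the direct identifier."""
    return {key: value for key, value in row.items() if key != "name"}


def band_age(row: dict) -> dict:
    """Hypothetical transform: coarsen age into a 10-year band."""
    low = (row["age"] // 10) * 10
    return {**row, "age": f"{low}-{low + 9}"}


raw = [{"name": "A", "age": 31, "plan": "basic"}, {"name": "B", "age": 36, "plan": "premium"}]
shared = release_to_shared_zone(raw, [drop_name, band_age], ["age"], min_k=2)
print(shared)  # [{'age': '30-39', 'plan': 'basic'}, {'age': '30-39', 'plan': 'premium'}]
```

Because the gate raises rather than warns, a misconfigured transformation stops the pipeline instead of silently widening access.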

Anonymizing machine learning data works best when treated as infrastructure rather than a patch.

Monitoring Re-Identification Risk Over Time

Privacy risk is not static.

New datasets appear. External data grows. Attack techniques evolve.

Regular testing helps identify emerging vulnerabilities.

Simulated re-identification attempts reveal weaknesses before harm occurs.
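A simple simulation is a linkage attack: join the released data to an outside dataset on shared attributes and count the people who match exactly one released record. In the sketch below, the function name, the "voter roll" data, and the field names are all hypothetical.

```python
from collections import defaultdict


def linkage_attack(released: list, external: list, quasi_ids: list, name_field: str) -> dict:
    """Join released rows to an external dataset on quasi-identifiers.

    Returns external names mapped to the single released row they match,
    i.e., records an attacker could plausibly re-identify.
    """
    index = defaultdict(list)
    for row in released:
        index[tuple(row[q] for q in quasi_ids)].append(row)
    hits = {}
    for person in external:
        matches = index.get(tuple(person[q] for q in quasi_ids), [])
        if len(matches) == 1:
            hits[person[name_field]] = matches[0]
    return hits


released = [
    {"age": 34, "zip": "94107", "diagnosis": "flu"},
    {"age": 51, "zip": "10001", "diagnosis": "asthma"},
    {"age": 51, "zip": "10001", "diagnosis": "flu"},
]
voter_roll = [
    {"name": "A. Smith", "age": 34, "zip": "94107"},
    {"name": "B. Jones", "age": 51, "zip": "10001"},
]
print(linkage_attack(released, voter_roll, ["age", "zip"], "name"))
# {'A. Smith': {'age': 34, 'zip': '94107', 'diagnosis': 'flu'}}
```

B. Jones matches two released rows and stays ambiguous, while A. Smith is exposed; the share of such unique matches is a practical risk signal to track between releases.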

Anonymizing machine learning data requires continuous vigilance.

Privacy is a moving target.

Privacy Risks During Model Training

Models themselves can leak information.

Overfitting increases memorization. Inference attacks exploit that behavior.

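Regularization techniques such as dropout and weight decay help reduce leakage. Differentially private training methods such as DP-SGD, which clips and adds noise to per-example gradients, go further by providing formal guarantees.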
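A common baseline attack simply guesses that a record was in the training set when the model's loss on it is unusually low. The sketch below scores that attack from two lists of per-record losses; the function name, threshold, and all loss values are made up for illustration, not measurements.

```python
def loss_threshold_attack(member_losses: list, nonmember_losses: list, threshold: float) -> float:
    """Guess 'member' whenever a record's loss is below the threshold.

    Returns membership advantage: true-positive rate minus false-positive rate.
    Values near 0 mean the attack does little better than chance; larger values
    suggest the model has memorized its training records.
    """
    tpr = sum(loss < threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss < threshold for loss in nonmember_losses) / len(nonmember_losses)
    return tpr - fpr


# Hypothetical overfit model: training losses are far lower than losses on unseen data.
print(loss_threshold_attack([0.01, 0.02, 0.05, 0.03], [0.9, 0.4, 1.2, 0.05], 0.1))  # 0.75
# Hypothetical regularized model: the two loss distributions overlap.
print(loss_threshold_attack([0.3, 0.5, 0.2, 0.6], [0.4, 0.5, 0.35, 0.7], 0.45))     # 0.0
```

Running a check like this on held-out data before release gives a rough, model-level counterpart to the dataset-level tests above.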

Privacy-aware training complements dataset anonymization.

Defense works best in layers.

Governance for Responsible Data Anonymization

Tools alone cannot guarantee privacy.

Clear policies define acceptable use. Access controls limit exposure. Documentation supports audits.

Cross-functional oversight strengthens decisions.

Anonymizing machine learning data succeeds when governance aligns technical practice with organizational values.

Team Awareness and Privacy Culture

People influence outcomes.

Engineers must understand privacy principles. Analysts should recognize risk signals.

Training builds shared understanding.

Anonymizing machine learning data improves when privacy becomes part of team culture rather than a compliance chore.

Common Pitfalls in Data Anonymization

Several mistakes appear repeatedly.

Relying on simple masking creates false confidence. Ignoring indirect identifiers leaves gaps.

Excessive anonymization destroys value. Insufficient anonymization invites harm.

Balance matters.

Anonymizing machine learning data requires iteration, testing, and review.

Measuring Success Beyond Compliance

Success involves more than regulatory approval.

Trust matters. Model performance matters. Stakeholder confidence matters.

Metrics should include privacy risk reduction and utility retention.
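Both metrics can start simple. The sketch below scores each record's re-identification risk as one divided by the size of its group of look-alikes and averages the result, and expresses utility retention as the anonymized model's score divided by the baseline's. The function names, records, and accuracy figures are hypothetical.

```python
from collections import Counter


def average_reid_risk(rows: list, quasi_ids: list) -> float:
    """Average per-record risk, scoring each record as 1 / (size of its equivalence class)."""
    keys = [tuple(row[q] for q in quasi_ids) for row in rows]
    counts = Counter(keys)
    return sum(1 / counts[k] for k in keys) / len(keys)


def utility_retention(baseline_score: float, anonymized_score: float) -> float:
    """Share of the baseline model's score (e.g., accuracy or AUC) kept after anonymization."""
    return anonymized_score / baseline_score


qi = ["age", "zip"]
raw = [{"age": 31, "zip": "94107"}, {"age": 36, "zip": "94110"},
       {"age": 52, "zip": "10001"}, {"age": 57, "zip": "10003"}]
released = [{"age": "30-39", "zip": "941**"}, {"age": "30-39", "zip": "941**"},
            {"age": "50-59", "zip": "100**"}, {"age": "50-59", "zip": "100**"}]
print(average_reid_risk(raw, qi))               # 1.0: every record is unique
print(average_reid_risk(released, qi))          # 0.5
print(round(utility_retention(0.90, 0.87), 3))  # 0.967, using hypothetical accuracies
```

Tracking the pair together keeps a release from looking safe only because it has become useless, or useful only because it is still risky.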

Anonymizing machine learning data supports sustainable AI when measured holistically.

The Future of Privacy-Preserving Machine Learning

Privacy expectations will continue to rise.

Techniques will mature. Tooling will improve. Standards will tighten.

Organizations that invest early gain resilience and trust.

Anonymizing machine learning data prepares teams for that future.

Conclusion

Data powers intelligent systems, yet privacy sustains trust. These forces must work together.

Anonymizing machine learning data enables responsible innovation. It protects individuals while preserving insight. It reduces risk while supporting growth.

When privacy becomes a design principle instead of an obstacle, AI earns confidence rather than suspicion.

Privacy is not the enemy of progress. It is its foundation.

FAQ

1. What does anonymizing machine learning data mean?

It means transforming data so individuals cannot be identified directly or indirectly.

2. Is anonymized data always exempt from privacy laws?

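Often yes, but only when anonymization is robust and irreversible. Pseudonymized data, which can be re-linked using additional information, generally remains in scope.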

3. Does anonymization always reduce model accuracy?

Not necessarily. Careful techniques preserve most useful patterns.

4. How often should anonymization methods be reviewed?

Regularly, especially as new data sources or attack methods appear.

5. Can synthetic data replace real data completely?

In some cases, yes, but validation and quality control are essential.