Artificial intelligence isn’t just reshaping industries—it’s reshaping decisions that affect real people. From hiring and lending to healthcare and policing, algorithms now play a crucial role in determining outcomes that once relied solely on human judgment. But with that power comes an urgent question: are these systems truly fair and ethical?

AI ethics and algorithmic bias are at the heart of this debate. As AI becomes more integrated into society, the need to understand how it can amplify inequality or reinforce prejudice is more important than ever. Let’s explore what AI ethics means, why algorithmic bias happens, and what can be done to build technology that serves everyone—fairly.

The Meaning of AI Ethics

AI ethics is about more than just programming rules—it’s about principles that guide how artificial intelligence systems should behave. At its core, AI ethics seeks to ensure that technology respects human values, safeguards rights, and operates transparently.

Think of it like teaching morality to a machine. If AI is going to make decisions that affect lives, it must do so within a framework that promotes fairness, accountability, and respect.

The most widely accepted principles of AI ethics include:

- Fairness: AI should make unbiased decisions.

- Transparency: People should understand how decisions are made.

- Accountability: Developers and organizations must take responsibility for outcomes.

- Privacy: Data should be protected and used responsibly.

- Beneficence: AI should benefit humanity and avoid harm.

When these principles are ignored, the results can be devastating. Algorithms may unintentionally discriminate, or they may make decisions that no one can explain, which is often called the “black box” problem.

What Is Algorithmic Bias?

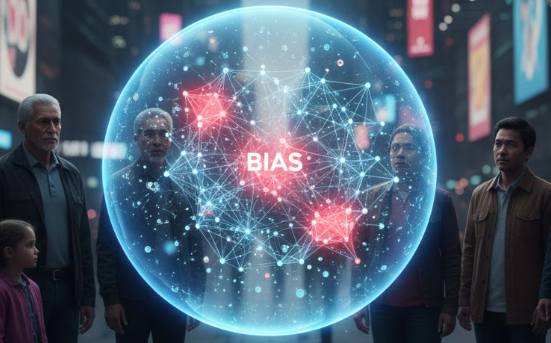

Algorithmic bias occurs when artificial intelligence systems produce results that are systematically unfair to certain groups or individuals. It happens when the data or logic used to train these systems reflects human prejudices, social inequalities, or flawed assumptions.

Let’s make it simple. Imagine an AI hiring system trained on resumes from a company that historically hired mostly men. Even without explicit sexism in the code, the AI learns to favor male candidates—replicating old biases in new ways.

Bias isn’t always intentional, but it’s always impactful. It can influence who gets a loan, who receives medical treatment, or even who gets stopped by law enforcement. The scary part? Many of these biases are invisible until the harm is already done.

How Bias Creeps into AI Systems

Bias doesn’t magically appear inside algorithms. It’s introduced through data, design choices, and the people who build and deploy the system. Understanding where it comes from is the first step in reducing it.

1. Biased Data Inputs

AI learns from historical data, and that data often reflects societal inequalities. If past hiring records, medical outcomes, or crime statistics are biased, the AI will inherit those same biases.

For example, an AI trained on healthcare data might underestimate pain levels for women or minority patients simply because past medical records did too. The machine doesn’t know it’s being unfair—it just follows the data.

2. Imbalanced Training Sets

When datasets don’t include diverse samples, AI models perform poorly for underrepresented groups. A facial recognition system trained mostly on light-skinned faces might fail to recognize faces with darker skin tones accurately.

This isn’t just theoretical; it has been measured. The Gender Shades study, led by Joy Buolamwini of the MIT Media Lab (who also founded the Algorithmic Justice League), found that commercial gender classification systems had error rates of up to roughly 35% for darker-skinned women, compared with under 1% for lighter-skinned men.
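A gap like this only becomes visible when error rates are broken out by group. The sketch below is a minimal illustration, assuming evaluation results are available as `(group, true label, predicted label)` tuples; the records and group names are made up for the example and are not data from any study.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: fraction of wrong predictions} for (group, y_true, y_pred) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation set: the "darker" group is both smaller and worse served.
records = (
    [("lighter", 1, 1)] * 98 + [("lighter", 1, 0)] * 2
    + [("darker", 1, 1)] * 14 + [("darker", 1, 0)] * 6
)

rates = error_rate_by_group(records)
overall = sum(1 for _, y_true, y_pred in records if y_true != y_pred) / len(records)

print(rates)    # lighter: 0.02, darker: 0.3
print(overall)  # about 0.067, which hides the gap between the two groups
```

The overall error rate looks acceptable because the larger group dominates the average, which is why a single headline accuracy number can be misleading.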

3. Flawed Algorithm Design

Sometimes, the problem lies in the model itself. Developers may use proxy variables that unintentionally encode sensitive information like race or gender. For instance, using postal codes in an algorithm can indirectly lead to racial bias, because geographic segregation means a postal code often reveals a person’s likely race.
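One rough way to spot a proxy is to ask how well the feature alone predicts the sensitive attribute. The sketch below is a simplified check under assumed data, not a standard library routine: it compares guessing the group by overall majority with guessing it by the majority within each postal code. The postal codes and groups are hypothetical.

```python
from collections import Counter, defaultdict

def proxy_strength(rows):
    """rows: (feature_value, sensitive_group) pairs.

    Returns (baseline, with_feature): the accuracy of guessing the group by
    overall majority vote versus by majority vote within each feature value.
    """
    overall = Counter(group for _, group in rows)
    baseline = overall.most_common(1)[0][1] / len(rows)

    by_value = defaultdict(Counter)
    for value, group in rows:
        by_value[value][group] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / len(rows)

# Hypothetical, strongly segregated data: each postal code is 90% one group.
rows = (
    [("10001", "A")] * 45 + [("10001", "B")] * 5
    + [("20002", "A")] * 5 + [("20002", "B")] * 45
)

baseline, with_postcode = proxy_strength(rows)
print(baseline, with_postcode)  # 0.5 0.9
```

A large jump from the baseline suggests the feature carries much of the sensitive information, so dropping the sensitive column alone would not make the model blind to it.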

4. Human Oversight Failures

AI systems are designed by people—and people have biases. If teams lack diversity or ethical oversight, unconscious biases can slip into design choices, testing, and deployment.

As a result, algorithms built to be “objective” often end up reflecting the very prejudices they were supposed to eliminate.

The Real-World Impact of Algorithmic Bias

Bias in algorithms isn’t just a technical flaw—it’s a societal issue with real-world consequences. When unchecked, it reinforces inequality rather than dismantling it.

1. Hiring and Employment

Recruitment algorithms can inadvertently favor men over women or certain ethnic groups over others. Amazon famously had to scrap its AI hiring tool after discovering it downgraded resumes that included the word “women’s,” such as “women’s chess club.”

2. Criminal Justice

Predictive policing tools, used to anticipate crime hotspots, often target neighborhoods with higher minority populations due to biased historical data. This creates a feedback loop—more policing leads to more reported crimes, which reinforces bias in future predictions.
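The feedback loop can be shown with a toy simulation. This is a deliberately simplified sketch, not a model of any real policing tool: two areas have the same underlying incident rate, the area with more recorded reports is labelled the hotspot, and incidents are only recorded where patrols go. All names and numbers are assumptions chosen for illustration.

```python
def simulate_patrol_feedback(reports, rounds, hotspot_patrols=70,
                             other_patrols=30, patrols_per_report=10):
    """Simulate two areas with the SAME underlying incident rate.

    Each round, the area with more recorded reports is treated as the hotspot
    and receives more patrols. Every `patrols_per_report` patrols record one
    new incident, wherever they are sent.
    """
    reports = dict(reports)      # copy so the caller's data is untouched
    history = [dict(reports)]
    for _ in range(rounds):
        hotspot = max(reports, key=reports.get)
        for area in reports:
            patrols = hotspot_patrols if area == hotspot else other_patrols
            reports[area] += patrols // patrols_per_report
        history.append(dict(reports))
    return history

# A small historical gap in reports (12 vs 10) is the only initial difference.
history = simulate_patrol_feedback({"north": 12, "south": 10}, rounds=5)
start, end = history[0], history[-1]

print(start["north"] / sum(start.values()))  # about 0.55
print(end["north"] / sum(end.values()))      # about 0.65
```

Even though both areas behave identically, the recorded data drifts further apart each round, and a model trained on those records would read the gap as real.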

3. Healthcare

AI models used to predict disease risk or allocate medical resources have shown bias against minority patients. In one widely cited 2019 study, an algorithm used by U.S. hospitals was less likely to flag Black patients for additional care than white patients with the same health conditions, because it used past healthcare spending as a stand-in for medical need.

4. Financial Services

Credit scoring systems powered by AI can perpetuate lending discrimination. If an algorithm learns from biased banking data, it may unfairly deny loans to applicants from certain demographics, even with similar financial profiles.

Each example reveals the same truth: AI mirrors society’s inequalities unless deliberately corrected.

The Importance of Transparency and Explainability

One of the biggest challenges in AI ethics is explainability—understanding why an algorithm made a certain decision.

When AI models are treated as black boxes, even developers may not fully understand their logic. This lack of transparency erodes trust, especially in fields like healthcare, law, or finance, where decisions carry high stakes.

Explainable AI (XAI) aims to fix this by making algorithms more interpretable. For example, it allows a doctor to understand why an AI suggested a certain treatment, or a loan officer to see why an applicant was denied credit.
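For a simple linear scoring model, an explanation can be as direct as listing how much each input pushed the score up or down. The sketch below assumes a hypothetical loan model with made-up weights and feature names; more complex models need dedicated attribution techniques, which this example does not cover.

```python
def explain_linear_score(weights, bias, features):
    """Return (score, contributions), where each contribution is weight * feature value."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions

# Hypothetical weights and applicant; none of these values come from a real lender.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.5}
applicant = {"income": 4.0, "debt_ratio": 0.5, "late_payments": 2}

score, contributions = explain_linear_score(weights, bias=0.5, features=applicant)
approved = score >= 0

# The most negative contributions are the main reasons for a denial.
reasons = sorted(contributions, key=lambda name: contributions[name])[:2]

print(score, approved)  # -1.5 False
print(reasons)          # ['late_payments', 'debt_ratio']
```

A loan officer reading this output can tell the applicant which factors mattered most, instead of only reporting that the system said no.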

Transparency builds trust. When users can see how and why AI systems work, they’re more likely to accept and benefit from them.

Addressing Algorithmic Bias: Strategies for Fairer AI

Building ethical, unbiased AI isn’t a single step—it’s an ongoing process involving technology, policy, and people. Here are the key strategies experts recommend:

1. Diverse Data Collection

Bias often begins with data. Ensuring that datasets represent diverse populations reduces the risk of skewed outcomes. This means including data from various genders, races, geographies, and socioeconomic backgrounds.
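A first check on representation is to compare each group’s share of the dataset with its share of the population the system will serve. The sketch below assumes group labels and reference shares are available; both are invented for the example.

```python
from collections import Counter

def representation_gaps(samples, reference_shares):
    """Return {group: dataset share minus reference share} for each reference group."""
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical dataset labels and target population shares.
samples = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference_shares = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(samples, reference_shares)
underrepresented = sorted(group for group, gap in gaps.items() if gap < 0)
print(underrepresented)  # ['B', 'C']
```

Negative gaps point to groups that need more data collected before training, although matching population shares does not by itself guarantee fair outcomes.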

2. Algorithmic Auditing

Independent audits can identify and mitigate hidden biases. These audits assess how algorithms perform across demographic groups, flagging areas where decisions may disproportionately affect certain populations.
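One basic audit metric is the approval rate per group and the ratio between the lowest and highest rate. The sketch below uses invented decisions. The 0.8 threshold is an assumption borrowed from the “four-fifths” rule of thumb used in U.S. employment contexts, and it is not a universal legal or technical standard.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: (group, approved) pairs. Returns {group: approval rate}."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Hypothetical audit sample: 100 decisions per group.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8   # assumed threshold, see the note above

print(rates)           # group_a: 0.6, group_b: 0.3
print(ratio, flagged)  # 0.5 True
```

A flagged ratio is a prompt for investigation rather than proof of discrimination, since the groups may differ in ways the audit sample does not capture.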

3. Human-in-the-Loop Systems

Keeping humans involved in critical decision-making adds ethical oversight. While AI can process information faster, humans can provide contextual understanding and moral judgment—something machines can’t replicate.
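In practice, a human-in-the-loop setup often means the system only acts on its own when it is confident and hands everything else to a person. The sketch below is one possible routing rule; the function name and both thresholds are assumptions for illustration.

```python
def route_decision(score, approve_at=0.8, reject_at=0.2):
    """Auto-decide only on clearly high or low scores; send the rest to a human."""
    if score >= approve_at:
        return "auto_approve"
    if score <= reject_at:
        return "auto_reject"
    return "human_review"

# Hypothetical model scores between 0 and 1.
scores = [0.95, 0.55, 0.10, 0.79]
routes = [route_decision(score) for score in scores]
print(routes)  # ['auto_approve', 'human_review', 'auto_reject', 'human_review']
```

For high-stakes uses, a stricter variant could send every rejection to a reviewer as well, so that no adverse decision is made without a person looking at it.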

4. Ethical AI Design Principles

Organizations like Google, Microsoft, and IBM have established AI ethics frameworks focusing on fairness, transparency, and accountability. Embedding these principles from the start makes it easier to keep a project ethically aligned throughout development.

5. Regulatory and Legal Oversight

Governments are beginning to introduce regulations that enforce ethical AI practices. The EU’s Artificial Intelligence Act, for example, classifies AI systems by risk level and imposes strict standards on high-risk applications.

The Role of AI Ethics Committees

Many organizations now form AI ethics committees—interdisciplinary groups of technologists, ethicists, lawyers, and social scientists—to review algorithms before deployment.

Their job is to ask hard questions:

- Is this technology fair?

- Does it respect user privacy?

- Could it cause unintended harm?

These committees don’t just prevent bias; they build accountability and public trust. By integrating ethical reviews into the AI lifecycle, companies can avoid reputational damage and legal risks while promoting responsible innovation.

Can AI Ever Be Truly Unbiased?

This is the question that drives the entire field of AI ethics—and the honest answer is: not completely.

Because AI learns from human-generated data, it will always reflect some level of human imperfection. The goal, therefore, isn’t to create perfectly neutral systems but to minimize harm and maximize fairness through constant monitoring and improvement.

It’s like steering a ship through choppy waters. You can’t control the waves, but you can adjust your course to stay balanced. The same applies to AI ethics—continuous adjustment ensures the technology stays on track toward fairness.

The Future of Ethical AI

The future of AI ethics and algorithmic bias lies in collaboration—between technologists, policymakers, and the public. As AI becomes more powerful, society must decide not just what it can do but what it should do.

Emerging techniques like federated learning, differential privacy, and explainable AI are already addressing privacy and transparency concerns at a technical level. But ethics isn’t purely technical. It’s also cultural, and it requires education, awareness, and empathy from everyone involved in designing intelligent systems.
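Of the techniques named here, differential privacy is the easiest to show in a few lines. It protects individuals in a dataset by adding calibrated noise to published statistics, which is a privacy safeguard rather than a bias fix. The sketch below is a minimal version of the Laplace mechanism for a counting query, using only the standard library; the count of 42 and the `epsilon` value are arbitrary.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng):
    """Laplace mechanism for a counting query (sensitivity 1): noise scale is 1 / epsilon."""
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(0)  # seeded only so the example is repeatable

# Each released answer is noisy, but the noise averages out over many draws.
noisy = [private_count(42, epsilon=1.0, rng=rng) for _ in range(20000)]
mean_noisy = sum(noisy) / len(noisy)
print(round(noisy[0], 2), round(mean_noisy, 2))
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate statistics.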

Ultimately, the goal is to ensure AI serves humanity—not the other way around.

Conclusion

AI ethics and algorithmic bias aren’t abstract concepts—they’re the invisible forces shaping how technology impacts our lives. By understanding them, we can build a future where artificial intelligence enhances human potential without reinforcing inequality.

The road to ethical AI isn’t easy. It demands vigilance, diversity, and transparency. But if we commit to fairness and accountability, we can transform AI from a mirror of our biases into a tool for justice, equality, and progress.

FAQ

1. What is AI ethics?

AI ethics is a set of moral principles guiding the development and use of artificial intelligence to ensure fairness, transparency, and accountability.

2. What causes algorithmic bias?

Algorithmic bias occurs when AI systems learn from biased or unrepresentative data, or when design choices such as proxy variables encode sensitive traits, leading to unfair or discriminatory outcomes.

3. How can we reduce bias in AI?

By diversifying datasets, conducting algorithmic audits, maintaining human oversight, and following ethical design principles throughout development.

4. Why is transparency important in AI?

Transparency builds trust and helps users understand how AI systems make decisions, allowing accountability for errors or bias.

5. Can AI ever be completely unbiased?

Not entirely. Since AI learns from human data, some bias is inevitable—but it can be minimized through constant monitoring and ethical practices.