Introduction

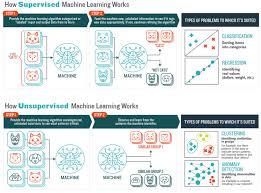

Machine learning has transformed numerous industries by enabling computers to learn from data and make intelligent decisions. The foundation of any ML model is the data it learns from, and properly annotating this data is critical to its success machine learning data notation refers to the systematic labeling and structuring of data to ensure that algorithms can effectively learn and generalize patterns.

In this article, we will explore the essential concepts behind machine learning data notation, including types of data, common annotation techniques, tools, challenges, and best practices.

Understanding Data in Machine Learning

machine learning data notation typically falls into three primary categories:

- Structured Data: This type of data is organized in a well-defined manner, often in tabular form. Examples include numerical and categorical datasets found in spreadsheets and relational databases.

- Unstructured Data: This consists of information without a predefined format, such as text, images, audio, and video.

- Semi-Structured Data: This data does not conform strictly to a tabular format but contains metadata or tags that provide some structure, like JSON or XML files.

Importance of Data Notation

machine learning data notation plays a crucial role in supervised learning, where labeled examples train models to make predictions. The quality of these annotations directly affects model accuracy. Well-annotated data helps:

- Improve model performance by providing clear learning patterns.

- Reduce bias and ensure fairness in decision-making.

- Enable interpretability and debugging of models.

Types of Data Annotation

1. Text Annotation

Text annotation is used for natural language processing tasks, such as sentiment analysis, named entity recognition, machine learning data notation and machine translation. Some common techniques include:

- Entity Recognition: Identifying and labeling names, locations, and organizations within a text.

- Part-of-Speech (POS) Tagging: Assigning grammatical categories (e.g., noun, verb, adjective) to words.

- Sentiment Annotation: Categorizing text based on emotional tone (positive, negative, neutral).

- Intent Annotation: Labeling text to identify user intent, often used in chatbots and virtual assistants.

2. Image Annotation

Image annotation is essential for computer vision tasks like object detection and facial recognition. Techniques include:

- Bounding Boxes: Drawing rectangles around objects of interest.

- Segmentation: Identifying each pixel that belongs to an object.

- Landmark Annotation: Marking specific points in an image, such as facial key points.

- Classification Labeling: Assigning categories to entire images (e.g., cat vs. dog).

3. Audio Annotation

Audio annotation is crucial for applications like speech recognition and sound classification. Common annotation methods include:

- Speech Transcription: Converting spoken words into text.

- Speaker Identification: Labeling audio segments with speaker names.

- Emotion Detection: Classifying voice recordings based on emotional tone.

- Background Noise Annotation: Identifying and categorizing different types of background noise.

4. Video Annotation

Video annotation extends image annotation techniques across time-series data. Key approaches include:

- Object Tracking: Tracking moving objects frame by frame.

- Action Recognition: Labeling specific actions in a video (e.g., running, jumping, sitting).

- Scene Description: Providing contextual information about a video clip.

- Event Detection: Identifying key events, such as accidents in surveillance footage.

Tools for Data Notation

Several tools facilitate the data annotation process of machine learning Some popular choices include:

- LabelImg (for image annotation)

- SuperAnnotate (for video and image labeling)

- Prodigy (for text annotation)

- Labelbox (for large-scale annotation projects)

- Amazon SageMaker Ground Truth (for scalable data labeling)

- Google Cloud AutoML (for automated annotation)

Challenges in Data Notation

Despite its importance, data annotation comes with several challenges:

- Cost and Time: Manual annotation is labor-intensive and expensive.

- Human Bias: Subjective labeling can introduce biases that affect model performance.

- Scalability: Large datasets require scalable annotation strategies.

- Data Privacy: Sensitive data must be handled carefully to ensure compliance with regulations like GDPR and CCPA.

- Annotation Consistency: Maintaining uniform labeling standards across annotators is difficult.

Best Practices for Effective Data Notation

To ensure high-quality annotations, consider the following best practices:

- Define Clear Annotation Guidelines: Establish detailed instructions for annotators to follow.

- Use Multiple Annotators: Reduce bias by having multiple individuals label the same dataset and resolve discrepancies.

- Automate Where Possible: Leverage AI assisted annotation tools to reduce manual effort.

- Conduct Quality Assurance (QA) Checks: Regularly audit annotations for accuracy and consistency.

- Balance the Dataset: Avoid bias by ensuring a diverse representation of data samples.

- Update Annotations Over Time: As models evolve, periodically update labeled datasets to maintain relevance.

Conclusion

machine learning data notation is a fundamental step in developing accurate and reliable machine learning models. Whether working with text, images, audio, or video, proper annotation ensures better model training and improved real-world performance. While annotation can be time-consuming and expensive, leveraging the right tools and best practices can make the process more efficient. As machine learning continues to evolve, the demand for high-quality annotated data will only increase, making effective data notation an essential skill in the AI landscape.

Leave feedback about this