In the age of intelligent applications and seamless API integrations, the combination of OpenAPI and artificial intelligence (AI) engines has paved the way for more robust, interoperable, and scalable systems. Whether you are developing chatbots, automating customer service, or integrating machine learning capabilities into your business processes, understanding how to use an OpenAPI key within an AI engine is essential.

This guide will explore the concepts behind OpenAPI and AI engines, and walk you through the process of securely using an OpenAPI key to integrate external APIs into AI-powered systems.

Understanding the Basics

What Is OpenAPI?

OpenAPI is a specification for describing APIs in a standardized, language-agnostic way. Initially developed as Swagger and now maintained by the OpenAPI Initiative, OpenAPI allows developers to describe RESTful APIs in a human- and machine-readable format, using a YAML or JSON file.

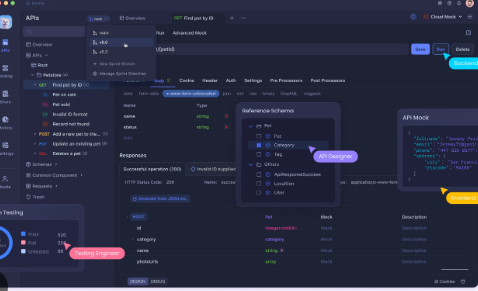

OpenAPI specifications define the structure of requests and responses, including endpoints, methods, parameters, request bodies, response formats, and security schemes. This makes it easier to document, test, and interact with APIs, often using tools like Swagger UI or Postman.
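To make that structure concrete, here is a minimal OpenAPI 3.0 document for a hypothetical weather API. It is written as a Python dictionary and serialized to JSON, one of the two formats OpenAPI documents use alongside YAML. The server URL, the `/forecast` path, the `getForecast` operation, and the `X-API-Key` header are illustrative placeholders, not a real service.

```python
import json

# Hypothetical OpenAPI 3.0 document: every name and URL here is a placeholder.
spec = {
    "openapi": "3.0.3",
    "info": {"title": "Example Weather API", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com/v1"}],
    "paths": {
        "/forecast": {
            "get": {  # endpoint + HTTP method
                "operationId": "getForecast",
                "summary": "Get the forecast for a city",
                "parameters": [
                    {"name": "city", "in": "query", "required": True,
                     "schema": {"type": "string"}}
                ],
                "responses": {
                    "200": {
                        "description": "Forecast data",
                        "content": {"application/json": {"schema": {"type": "object"}}},
                    },
                    "401": {"description": "Missing or invalid API key"},
                },
            }
        }
    },
    "components": {
        # Security scheme: callers authenticate with an API key in a request header.
        "securitySchemes": {
            "ApiKeyAuth": {"type": "apiKey", "in": "header", "name": "X-API-Key"}
        }
    },
    # Apply the scheme to every operation in the document.
    "security": [{"ApiKeyAuth": []}],
}

# Serialize to JSON; this string could be saved as openapi.json.
spec_json = json.dumps(spec, indent=2)
```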

What Is an AI Engine?

An AI engine is a software component or platform that processes data using artificial intelligence algorithms to deliver insights, automation, or interactivity. AI engines can include natural language processing (NLP), machine learning (ML), computer vision, speech recognition, and decision-making systems.

Common AI engines include:

What Is an OpenAPI Key?

An OpenAPI key is an access credential used to authenticate API requests. Strictly speaking, OpenAPI does not issue keys: the key comes from the API provider, and the provider's OpenAPI document declares how to send it through an `apiKey` security scheme. The key acts like a password between your application and the external API. When integrated into an AI engine, it ensures that only authorized applications can access the API, and it typically lets the provider monitor usage, enforce limits, and bill you.

In most cases:

- The API key is passed in the HTTP request header or query string.

- It is linked to a developer account or organization.

- It can be rotated or revoked for security.
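The two common placements can be sketched with Python's standard library. In this sketch, `build_request` is a hypothetical helper. Its `location` and `key_name` arguments mirror the `in` and `name` fields of an OpenAPI `apiKey` security scheme, and the URL and key names are placeholders.

```python
import urllib.parse
import urllib.request


def build_request(base_url, params, api_key, location="header", key_name="X-API-Key"):
    """Attach an API key as a request header or as a query-string parameter.

    `location` and `key_name` correspond to the `in` and `name` fields of an
    OpenAPI apiKey security scheme; the defaults are hypothetical.
    """
    params = dict(params)  # copy so the caller's dict is not modified
    headers = {}
    if location == "header":
        headers[key_name] = api_key
    elif location == "query":
        params[key_name] = api_key
    else:
        raise ValueError(f"unsupported key location: {location!r}")
    url = base_url + "?" + urllib.parse.urlencode(params) if params else base_url
    return urllib.request.Request(url, headers=headers)
```

Where the API allows a choice, a header is generally the safer option, because full URLs (including query strings) tend to end up in server logs, proxy logs, and browser history.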

Why Use OpenAPI with an AI Engine?

Combining OpenAPI with AI engines offers several advantages:

How Do You Use OpenAPI Key in AI Engine?

Let’s break down the step-by-step process of using an OpenAPI key in an AI engine:

1. Select and Register for an API

Before you can use an API key, you need access to an API that uses OpenAPI and provides a key-based authentication mechanism.

2. Understand the OpenAPI Specification

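If the API is described using OpenAPI, review the file that defines the API's endpoints, parameters, and security schemes. Pay particular attention to the security scheme, which tells you whether the key goes in a request header or in the query string, and under what name.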
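One practical thing to pull out of the specification is where the key belongs. In OpenAPI 3.x, schemes live under `components.securitySchemes` and are switched on by `security` requirements (Swagger 2.0 used `securityDefinitions` instead). `find_api_key_scheme` below is a hypothetical sketch that handles only top-level requirements and ignores operation-level overrides.

```python
def find_api_key_scheme(spec):
    """Return (location, name) for the first apiKey scheme the spec requires, else None.

    Resolves top-level `security` requirements against
    `components.securitySchemes` (OpenAPI 3.x layout only).
    """
    schemes = spec.get("components", {}).get("securitySchemes", {})
    for requirement in spec.get("security", []):
        for scheme_name in requirement:
            scheme = schemes.get(scheme_name, {})
            if scheme.get("type") == "apiKey":
                # `in` is "header", "query", or "cookie"; `name` is the header/param name.
                return scheme["in"], scheme["name"]
    return None


# Hypothetical spec fragment: the key is sent as the `api_key` query parameter.
example_spec = {
    "components": {
        "securitySchemes": {"ApiKeyAuth": {"type": "apiKey", "in": "query", "name": "api_key"}}
    },
    "security": [{"ApiKeyAuth": []}],
}
print(find_api_key_scheme(example_spec))  # → ('query', 'api_key')
```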

3. Integrate the API into Your AI Engine

This depends on the type of AI engine you are using. Let’s look at some common scenarios:

a. Using OpenAI GPT with External APIs

You may want to use OpenAI’s GPT engine to call a third-party API. You can accomplish this with functions or tools like:

- Function calling (OpenAI GPT-4): You define a function schema that describes an API endpoint.

- External webhook or server: Your GPT agent can call a webhook on a server you control, which then calls the external API using your key. The function that makes this call can be connected to a GPT agent that invokes it dynamically based on user input.
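A minimal sketch of both ideas, assuming a hypothetical `get_forecast` function, a hypothetical weather endpoint, and a hypothetical `WEATHER_API_KEY` environment variable. The tool definition follows the shape OpenAI's Chat Completions API accepts in its `tools` list. The code does not call the OpenAI SDK itself. It only shows the schema and the server-side dispatch that runs when the model returns a tool call containing a function name and JSON-encoded arguments.

```python
import json
import os
import urllib.parse
import urllib.request

# Tool definition in the shape accepted by the Chat Completions `tools` list.
# Name, description, and parameters describe a hypothetical weather API.
GET_FORECAST_TOOL = {
    "type": "function",
    "function": {
        "name": "get_forecast",
        "description": "Get the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string", "description": "City name, e.g. Oslo"}},
            "required": ["city"],
        },
    },
}


def get_forecast(city, opener=urllib.request.urlopen):
    """Call the hypothetical external API. The key is read on the server, never sent to the model."""
    api_key = os.environ["WEATHER_API_KEY"]  # hypothetical variable name
    url = "https://api.example.com/v1/forecast?" + urllib.parse.urlencode({"city": city})
    request = urllib.request.Request(url, headers={"X-API-Key": api_key})
    with opener(request, timeout=10) as response:
        return json.load(response)


# Map tool names the model may return to local functions.
HANDLERS = {"get_forecast": get_forecast}


def run_tool_call(name, arguments_json, **kwargs):
    """Execute a tool call chosen by the model; `arguments_json` is the model's JSON string.

    Extra keyword arguments (such as a test `opener`) are passed through to the handler.
    """
    handler = HANDLERS.get(name)
    if handler is None:
        raise ValueError(f"model requested unknown tool: {name}")
    args = json.loads(arguments_json)
    return handler(**args, **kwargs)
```

Because `run_tool_call` executes on your server, the API key never appears in the prompt or in the model's output; the model only sees the tool's name, description, and parameters.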

b. Calling APIs from LangChain Agents

LangChain is a popular framework for AI agents that supports OpenAPI integration. You can use its OpenAPI tooling to call API endpoints described by an OpenAPI spec. The agent can then autonomously choose which endpoints to call based on the OpenAPI definition and respond to users accordingly.
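LangChain's OpenAPI helpers have changed across releases, so rather than guess at a specific class or signature, the framework-agnostic sketch below shows the idea they build on: each operation in the spec becomes a named, described tool that an agent can choose from. `operations_from_spec` is a hypothetical helper. It handles only OpenAPI 3.x `paths` and skips parameters given as `$ref`.

```python
# HTTP methods that can appear as keys of an OpenAPI Path Item Object.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def operations_from_spec(spec):
    """List each operation in an OpenAPI 3.x spec as a tool description for an agent."""
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            tools.append({
                # Prefer the spec's operationId; fall back to "METHOD /path".
                "name": operation.get("operationId", f"{method.upper()} {path}"),
                "description": operation.get("summary") or operation.get("description", ""),
                "method": method.upper(),
                "path": path,
                # Only inline parameters have a "name"; $ref parameters are skipped.
                "parameters": [p["name"] for p in operation.get("parameters", []) if "name" in p],
            })
    return tools
```

An agent framework would pass these names and descriptions to the model, then map the model's choice back to `method` and `path` when making the HTTP call.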

4. Secure Your API Key

Never hard-code your API key in production code or expose it in public repositories. Instead:

- Store it in secret managers (e.g., AWS Secrets Manager, Google Secret Manager).

- Rotate API keys on a regular schedule.
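A common pattern is to have the deployment inject the key from the secret manager into an environment variable, so the code only reads it at runtime and the key never lives in the repository. The sketch below assumes a hypothetical `EXAMPLE_API_KEY` variable and fails fast when it is missing.

```python
import os


class MissingApiKeyError(RuntimeError):
    """Raised when the expected API key is not present in the environment."""


def load_api_key(var_name="EXAMPLE_API_KEY"):
    """Read the key from the environment (populated by a secret manager or deploy config)."""
    value = os.environ.get(var_name, "").strip()
    if not value:
        # Fail fast rather than sending unauthenticated requests that come back as 401s.
        raise MissingApiKeyError(f"environment variable {var_name} is not set")
    return value
```

Reading the key at call time rather than caching it at import time also means a rotated key takes effect as soon as the environment is updated and the process restarted.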

5. Handle Errors and Rate Limits

Most APIs have rate limits and error codes. Your AI engine should be able to handle:

- HTTP 429 (Too Many Requests): Back off and retry after a delay, honoring the Retry-After header if the API sends one.

- HTTP 401/403: Check if your API key is valid and authorized.

- Timeouts or Failures: Provide fallback mechanisms (e.g., cached responses).

Example retry logic in Python:
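A minimal sketch using only the standard library. It retries on HTTP 429 with exponential backoff, honors a numeric Retry-After header, and re-raises every other HTTP error (including 401/403, which retrying cannot fix). The `opener` and `sleep` parameters exist so the function can be tested without a network; the delays and retry count are arbitrary defaults.

```python
import time
import urllib.error
import urllib.request


def fetch_with_retry(request, max_retries=3, base_delay=1.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Send `request`, retrying only on HTTP 429 with exponential backoff.

    Any other HTTP error (e.g. 401/403 for a bad or unauthorized key) is
    re-raised immediately, as is a 429 on the final attempt.
    """
    for attempt in range(max_retries + 1):
        try:
            with opener(request, timeout=10) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            if err.code != 429 or attempt == max_retries:
                raise
            # Prefer the server's Retry-After value (in seconds) when present;
            # otherwise wait base_delay, 2 * base_delay, 4 * base_delay, ...
            retry_after = err.headers.get("Retry-After") if err.headers else None
            if retry_after and retry_after.isdigit():
                delay = float(retry_after)
            else:
                delay = base_delay * (2 ** attempt)
            sleep(delay)
```

Production clients often add random jitter to the delay so that many clients do not retry in lockstep. Timeouts and connection errors (`urllib.error.URLError`) propagate to the caller, which is where a cached-response fallback would fit.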

6. Log and Monitor Usage

Most API providers offer a dashboard to monitor API usage. On your end:

- Log requests/responses for auditing.

- Monitor latency, failure rates, and usage limits.

- Alert if API key is compromised or overused.
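A minimal sketch of the logging side with Python's `logging` module. `UsageMonitor` and `mask_key` are hypothetical helpers: they log each call's status and latency without ever writing the raw key, track the failure rate, and emit a warning once an assumed hourly call budget is exceeded. A real setup would route that warning to whatever alerting or observability tool you use.

```python
import logging
import time

logger = logging.getLogger("api_client")


def mask_key(api_key: str) -> str:
    """Return a log-safe form of the key: at most the last 4 characters survive."""
    return "****" + api_key[-4:] if len(api_key) > 8 else "****"


class UsageMonitor:
    """Tracks calls per one-hour window and warns when a hypothetical budget is exceeded."""

    def __init__(self, hourly_limit: int):
        self.hourly_limit = hourly_limit
        self.window_start = time.monotonic()
        self.calls = 0
        self.failures = 0

    def record(self, url: str, status: int, latency_ms: float, api_key: str) -> None:
        now = time.monotonic()
        if now - self.window_start >= 3600:
            # A new hour has started: reset the counters.
            self.window_start, self.calls, self.failures = now, 0, 0
        self.calls += 1
        if status >= 400:
            self.failures += 1
        # Log only the masked key; this assumes the key travels in a header, not in `url`.
        logger.info("call url=%s status=%d latency_ms=%.1f key=%s",
                    url, status, latency_ms, mask_key(api_key))
        if self.calls > self.hourly_limit:
            logger.warning("key %s exceeded %d calls this hour",
                           mask_key(api_key), self.hourly_limit)

    @property
    def failure_rate(self) -> float:
        """Share of calls in the current window that returned an HTTP error status."""
        return self.failures / self.calls if self.calls else 0.0
```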

For enterprise-level applications, consider integrating observability tools like Datadog or Prometheus to monitor API activity.

7. Testing Your AI Integration

Use tools like:

- Swagger UI to test OpenAPI endpoints.

- Postman to send requests with your API key.

- Unit tests and mocking for automated testing of AI engine behavior under different API responses.
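For the unit-testing point, here is a sketch with the standard `unittest` and `unittest.mock` modules. `get_forecast` is a hypothetical client function. The HTTP layer is passed in as `opener`, so a `Mock` can stand in for `urlopen` and the tests can check that the key is sent and that a 401 surfaces as an error, all without touching the network.

```python
import io
import json
import unittest
import urllib.error
import urllib.parse
import urllib.request
from unittest import mock


def get_forecast(city, api_key, opener=urllib.request.urlopen):
    """Hypothetical client under test: calls a weather endpoint with the key in a header."""
    url = "https://api.example.com/v1/forecast?" + urllib.parse.urlencode({"city": city})
    request = urllib.request.Request(url, headers={"X-API-Key": api_key})
    with opener(request, timeout=10) as response:
        return json.load(response)


class GetForecastTest(unittest.TestCase):
    def test_sends_key_and_parses_json(self):
        # The mock replaces urlopen and returns a canned JSON body.
        opener = mock.Mock(return_value=io.BytesIO(b'{"temp_c": 21}'))
        self.assertEqual(get_forecast("Oslo", "test-key", opener=opener), {"temp_c": 21})
        request = opener.call_args.args[0]
        # urllib.request normalizes header names, so "X-API-Key" is stored as "X-api-key".
        self.assertEqual(request.get_header("X-api-key"), "test-key")
        self.assertIn("city=Oslo", request.full_url)

    def test_invalid_key_raises(self):
        # Simulate the provider rejecting the key with HTTP 401.
        error = urllib.error.HTTPError("https://api.example.com", 401, "Unauthorized", {}, None)
        opener = mock.Mock(side_effect=error)
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            get_forecast("Oslo", "bad-key", opener=opener)
        self.assertEqual(ctx.exception.code, 401)
```

Saved to a file, these tests run with `python -m unittest`.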

Real-World Use Cases

1. AI Chatbot with Real-Time Data

A GPT-based chatbot that uses OpenAPI-described APIs to fetch weather, news, or stock prices in real time.

2. Healthcare AI Assistant

An AI engine integrated with medical APIs (like DrugBank or ClinicalTrials.gov), using API keys for access where the provider requires them.

3. Financial AI Tool

A financial model that pulls market data from APIs like Alpha Vantage or Yahoo Finance, authenticating with API keys where required.

Conclusion

Using an OpenAPI key in an AI engine bridges the gap between structured external data and intelligent decision-making. With a well-documented OpenAPI specification, secure key management, and intelligent error handling, AI systems can deliver accurate, real-time results by leveraging external APIs.

Whether you’re building a chatbot, virtual assistant, or enterprise analytics tool, mastering the integration of OpenAPI keys with your AI engine unlocks immense potential. Just remember to prioritize security, monitoring, and scalability in your implementations to ensure long-term success.
